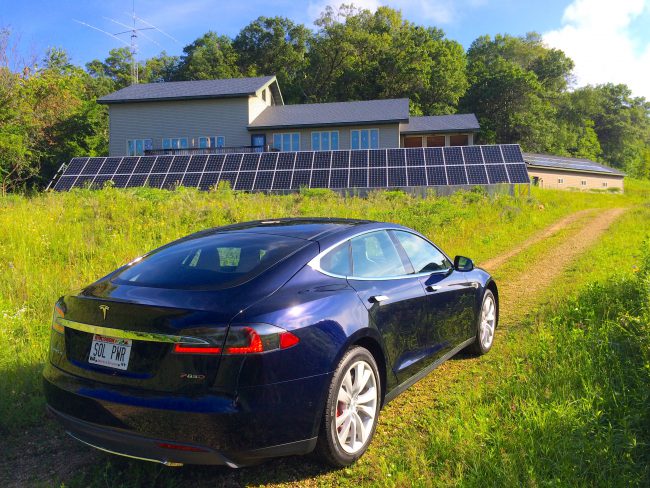

November 2017 – “Romantic Comedy Challenge”

We were provided instructions and a voice track (narration and character dialog) by an imaginary director who is looking for a score for a romcom trailer. The “director” set a one-week deadline! I sweated this one a bit, given that this is also Fall Projects season here at Prairie Haven.

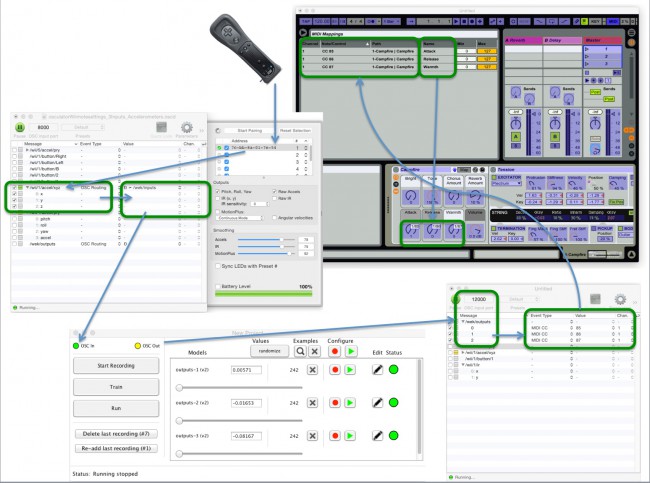

Pretty darn cool challenge. This was by far the most complex mix I’ve done in quite a while: 26 tracks across 13 scenes (in 2 minutes!).

Some fun! Here’s the link to the 2-minute trailer.