I made a video version of this that you can watch here:

But I like this kinda thing as a blog post, so here it is. Click on the pictures to embiggen them.

It starts out with the build (and takes a detour into reworking the assembly of the Vevor rake I bought so that it sits in my quick-attach 3-point hitch setup).

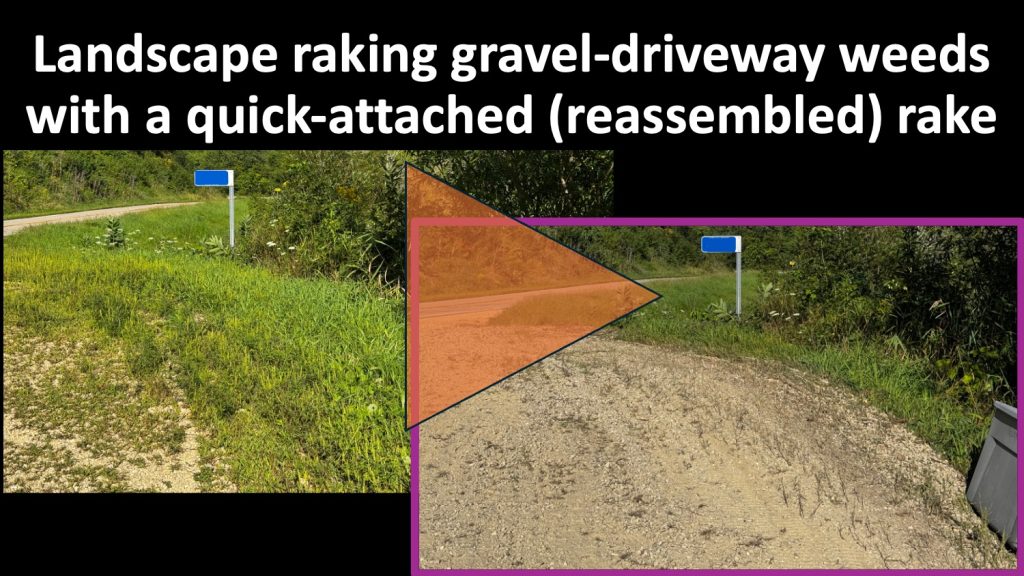

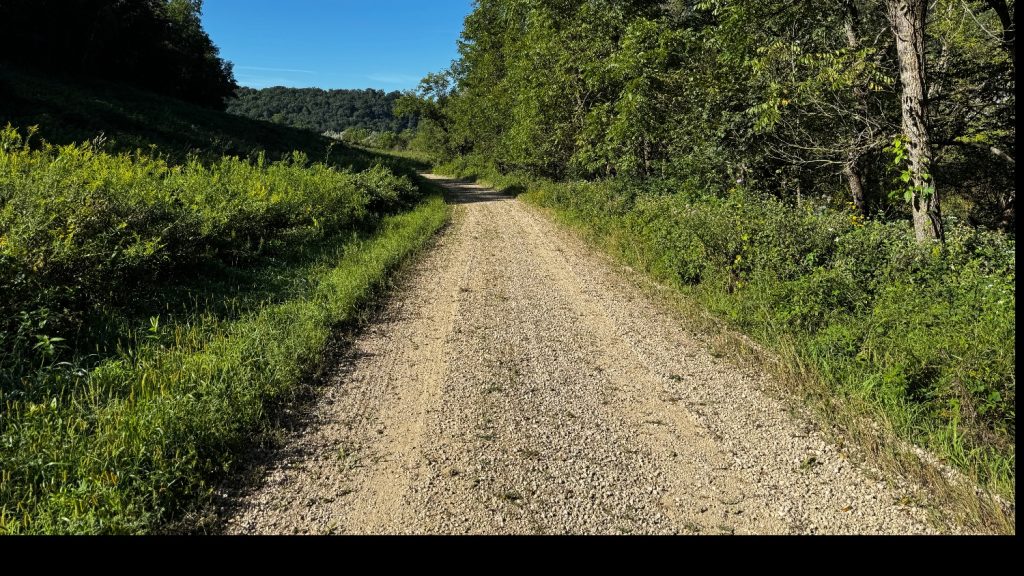

The last part is a bunch of pictures before and after a day of raking the summer weeds out of the driveway. I found this to be a LOT easier/better than using the land plane to do the job. So from now on, I’ll use the land plane in the spring and the rake for the rest of the year. Here’s a link to my post about the land plane.

The story begins with the 140-pound box crushing my little deliveries tub at the top of the driveway. Awww.

We rassled it into the bucket and hauled it to the garage…

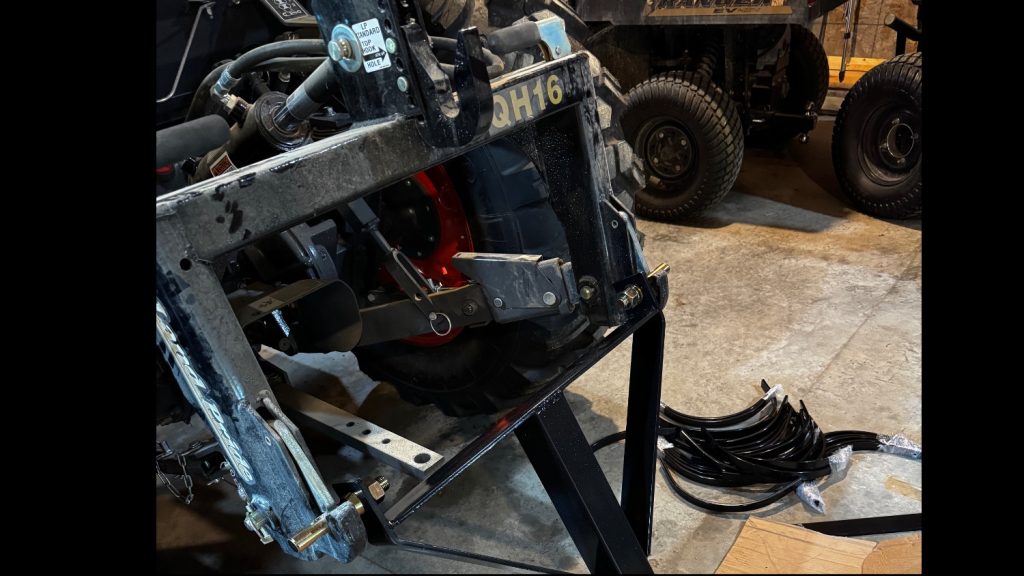

… and I started building it on the tractor. Step 1 looked fine, the first piece dropped right into the quick-attach 3-point hitch. But…

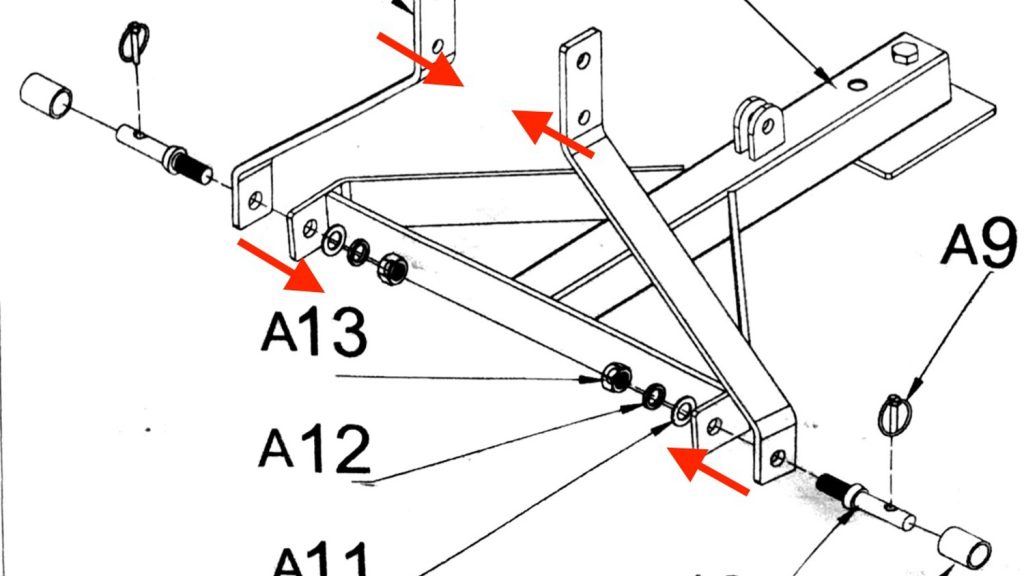

… which creates two puzzlers. First, assembling according to the instructions made it too wide for quick-attach. AND the top connection won’t work because they have you mount the diagonal bar right in the place where the top hook of the quick-attach wants to go. More pictures about both of those in a minute…

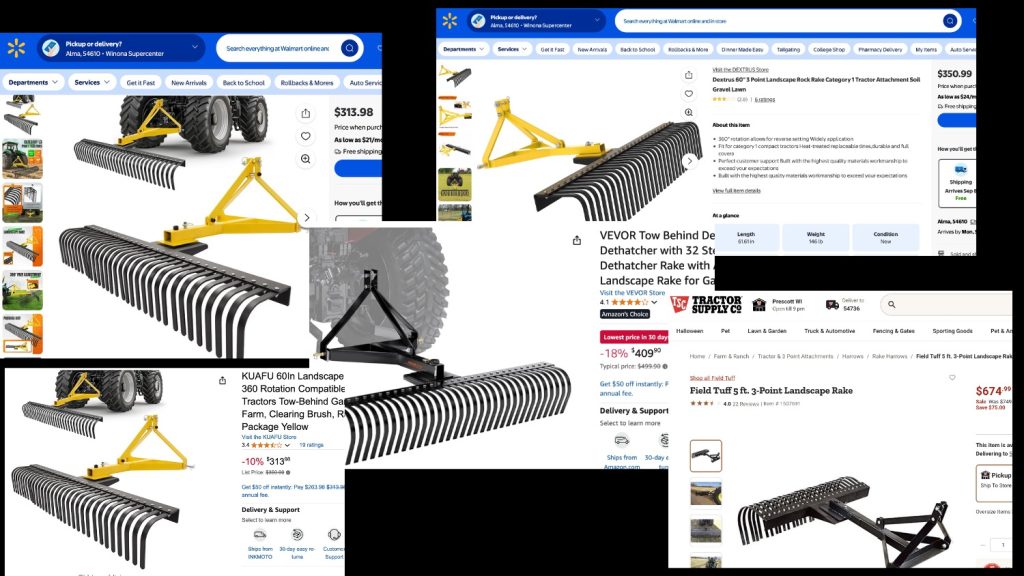

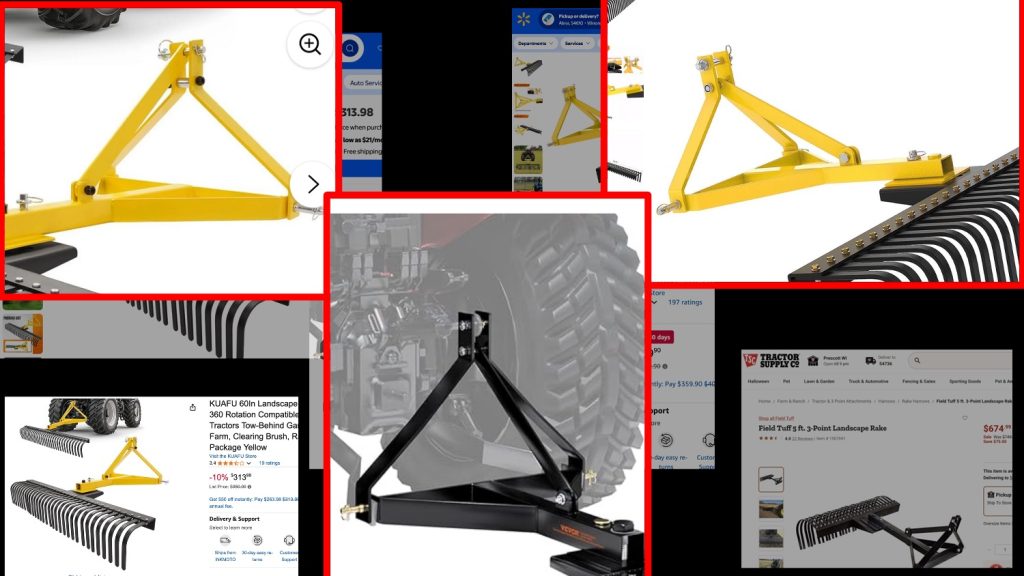

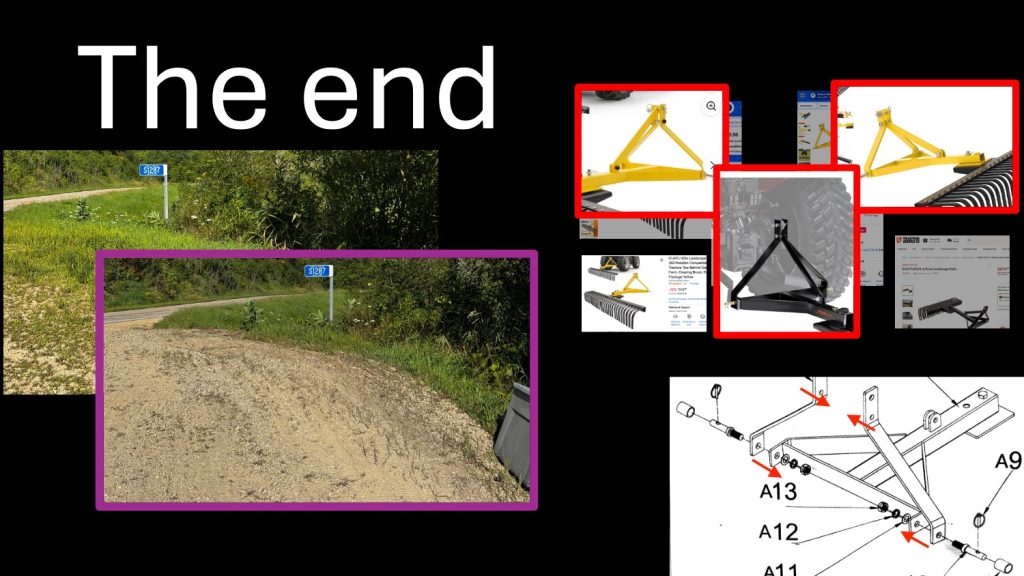

Here’s the detour that shows how I got it to fit in my quick-attach. First thing — there are lots of versions of these out there that are all slightly different. The one I bought was the Vevor (which sits in the middle of this picture).

The tricky bit is that each of them assembles its 3-point mount slightly differently, so my scheme may not work for all of them. For example, the one in the upper left looks like the top hook will work: they've mounted the diagonal above the bar that the top hook wants to slide into. But they still assemble the bottom so that it looks like it will be too wide. The best plan would be to get confirmation from your vendor that it'll work with a quick-attach 3-point hitch before you buy it. Second best would be to get detailed measurements and confirm for yourself. Third best is to cross your fingers and hope my scheme will work for you.

Here is how I handled the “too wide” problem. I moved the two diagonal bars from the outside to the inside of that bottom piece. That got me back to a width that fits in the bottom of the quick attach.

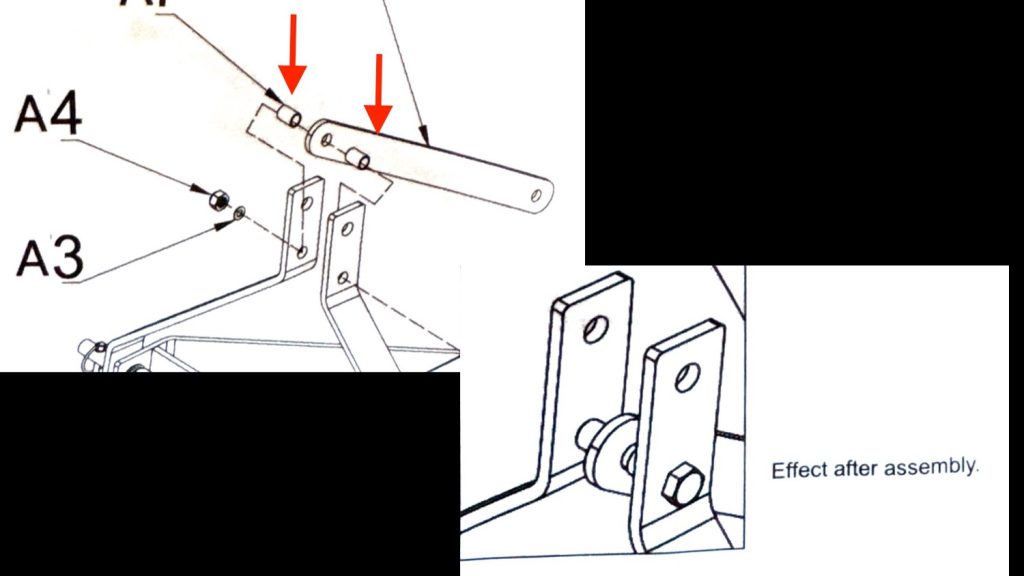

To make room for the top-hook of the quick attach, I assembled the top bolt with the diagonal bar on one side and then the two bushings next to each other, which made enough room for the top hook to come up and engage.

Here’s a string of happy pictures showing how it now fits in the quick attach.

This is one side of the bottom with a little bit of space…

This is the other side, snug but engaged.

This is a side shot showing that the top hook can now slide up past that moved-to-the-side diagonal piece.

Here’s the assembly. Two bushings, THEN the diagonal brace. Plenty of room for the top hook now.

Back to the build-out pictures…

My only minor complaint is that the rake can only be rotated in 45-degree increments. I'd like less-aggressive angling options and may drill another hole or two at some point. For now, I've found that swinging the 3-point hard to one side lines the 60″ rake up with the edge of the tractor tires and gives a little bit of pull-to-the-center angling. Not too bad.

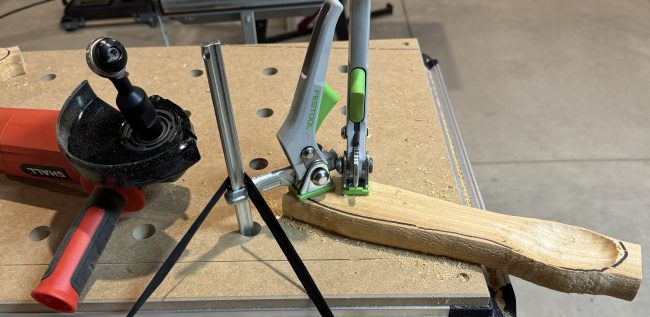

Putting tines on…

… gets pretty tedious, and Marcie rescued me at the end by being a third hand.

Done.

On to the before-and-after pictures. I couldn't find any good ones out on the ‘net. So here's the result of about six passes over the driveway. Each pass took about an hour, so it came to about a day of weed pulling on about 3/4 mile of gravel driveway. Sure beats working.

A great project. With a nice puzzler in the middle.