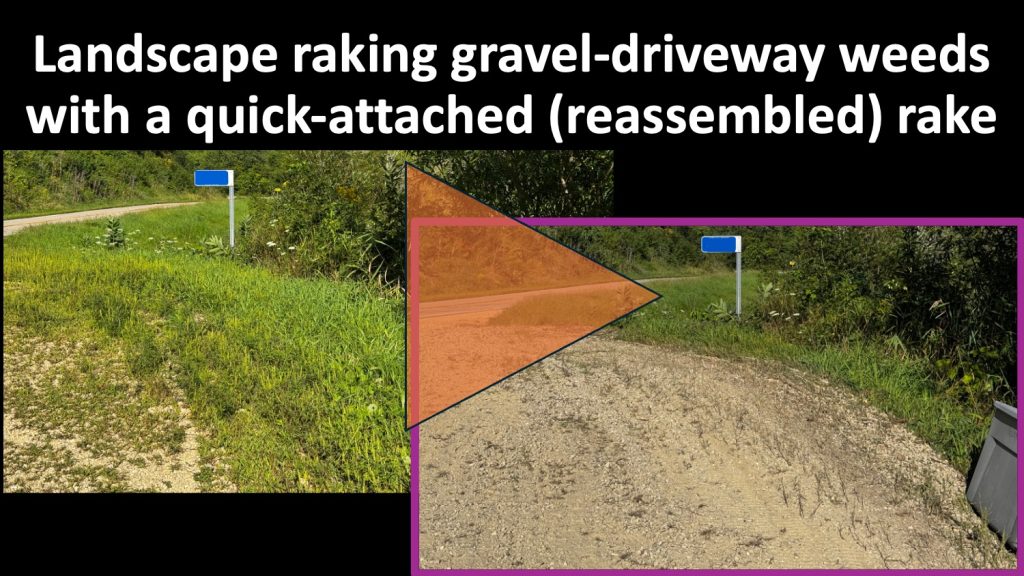

My nifty new BirdOMatic bioacoustic data analysis system is up and running! To celebrate, I asked ChatGPT to SWAG how long my Apple II would have taken to analyze the bioacoustic audio data. Pretty much fun. Scroll to the bottom for the punchline….

By the way, ChatGPT had to be coaxed through this a little bit. It got the calculation of the compute ratio between the Apple II and the Mac Mini wrong by a factor of a thousand (those darn millions and billions…) the first time through. You probably don’t want ChatGPT to reconcile your checkbook without looking over its shoulder.

Here’s the prompt to ChatGPT:

compare the computation, memory and disk specifications of my current 2024 Mac Mini (M4 processor, 24 gb RAM memory, 80TB of disk) with my Apple II (purchased new around 1980, 48 kB RAM memory, 16 kB language card).

then ignore the many impossibilities and estimate the approximate time it would take the Apple II to process a one hour WAV file of bird calls — a process that takes about 4 seconds on the Mac Mini.

then note that i have about 40,000 audio files in the dataset. using the prior estimates, extrapolate how long both computers would take to process all the files.

Here’s the response:

1. Hardware comparison

Computation

| Machine | CPU | Clock | Approx. performance |

|---|---|---|---|

| Apple II (~1980) | MOS 6502 | ~1 MHz | ~0.3–0.5 MIPS |

| Mac mini (2024, M4) | Apple M4 (multi-core, SIMD, GPU) | ~4 GHz | ~10–20 trillion ops/sec |

Compute ratio (order of magnitude):

~

100 billion×10 million x more computational power for the Mac mini (ChatGPT goofed on this one)

(very conservative for signal processing workloads)

Memory

| Machine | RAM |

|---|---|

| Apple II | 48 kB + 16 kB language card = 64 kB |

| Mac mini | 24 GB |

Ratio:

~400,000× more RAM

Disk / Storage

| Machine | Storage |

|---|---|

| Apple II | ~140 kB floppy |

| Mac mini | 80 TB |

Ratio:

~570 million× more storage

2. Single-file processing time

Given

- 1-hour WAV file

- Mac mini time: ~4 seconds

- Ignore all practical impossibilities for the Apple II

Extrapolated Apple II time

Using the ~10,000,000 × compute gap:

4 seconds × 10,000,000

= 40,000,000 seconds

Convert to years:

40,000,000 ÷ 31,536,000 ≈ 1.275 years

🐦 Apple II: ~12–13 years per file

3. Dataset-wide extrapolation (40,000 files)

Mac mini

40,000 × 4 s = 160,000 s

Conversions:

- ≈ 44.4 hours

- ≈ 1.85 days

✅ Mac mini total time: ~2 days

Apple II

1.27 years/file × 40,000 files

≈ 508,000 years

🦖 Apple II total time: ~500 thousand years

4. Final summary

| Machine | Time per file | Time for 40,000 files |

|---|---|---|

| Mac mini (M4) | ~4 seconds | ~2 days |

| Apple II | ~12,700 years | ~500 thousand years |

5. Perspective

One weekend on your Mac mini equals half a million years on an Apple II.